Rose Lazuli - Production Devlog 2

Post by Rose Lazuli

The Problem: How do we Playtest?

This week, the team wanted to start playtesting so we could validate some ideas. While the team had been putting in good work, we'd all been working separately, and we wanted to make sure things came together well. As such, it was my job to make a playtest protocol. However, while I'd been testing narrative over the last few weeks, I wasn't totally sure what the best ways to test for the other elements would be. With such a wide array of elements, the testing would need to be more thorough in a lot of ways, and more structured because multiple people would be moderating it.

Identifying the Goal

To help narrow down which questions we wanted to answer, I wrote a protocol document. I started by outlining the playtest goals:

- Ensure the core gameplay loop of picking up/throwing with the VR headset is meeting engagement expectations (repeating steps needed to complete minigame).

- Confirm core themes are being communicated effectively via gameplay, narrative, and setting.

- Ensure the “real world” and “VR” versions of each minigame have the correct balance of monotony and excitement to encourage players to use the headset.

- Verify input and controls are intuitive and engaging by looking for sources of confusion.

By identifying exactly what the playtest would be trying to answer and focus on, we were able to better scaffold the rest of the protocol.

Narrowing Playtesting Methods

Knowing what we were looking to answer helped inform which methods we'd use to gather data. Most of these questions are qualitative, focusing on the player's feelings and understanding, so telemetry and other objective game metrics wouldn't suit the test very well. That said, I still wanted to make sure we got a good mix of qualitative and quantitative data to review. With that in mind, I identified three tools that would be good to use:

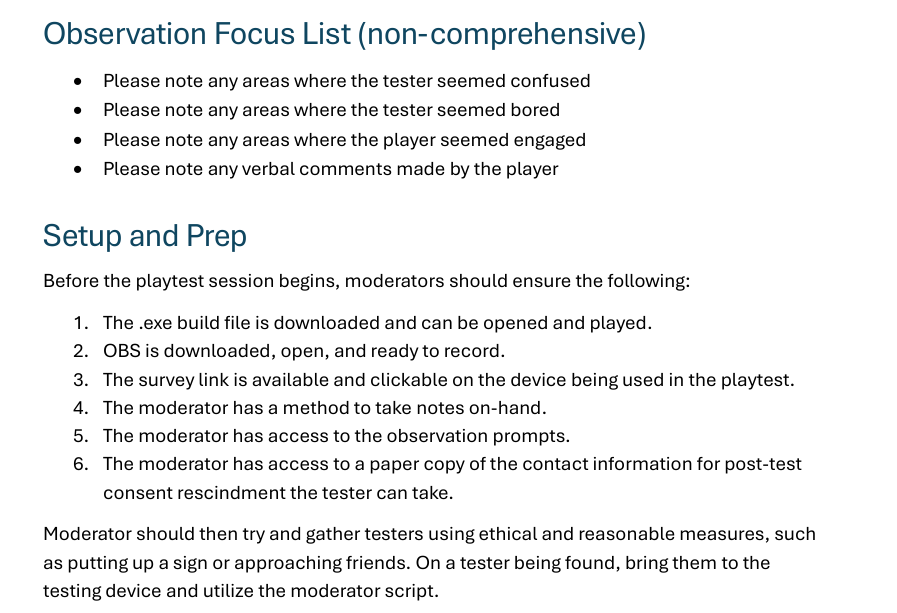

MODERATOR NOTES were selected as a good way to capture early impressions and identify moments of confusion or boredom. Initial reactions say a lot about how players are feeling, and getting a comprehensive list of these moments after the fact can be difficult. By having the moderator look out for important moments and reactions, we can get an unfiltered understanding of where we might need to make adjustments to get the desired player response. To help keep moderators from taking notes on everything, I also provided some prompts on what to look out for:

- Please note any areas where the tester seemed confused.

- Please note any areas where the tester seemed bored.

- Please note any areas where the player seemed engaged.

- Please note any verbal comments made by the player.

VIDEO RECORDINGS were the next important choice. While moderator notes are good at catching important moments, humans can be imprecise or forget what a note means. With a recording, we have a log of the playtester's actions that we can review and line up with the moderator notes. This allows for a more thorough review of important moments and removes the potential for a faulty memory leading us in the wrong direction. OBS ended up being a solid choice for quickly recording gameplay sessions.
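As a rough illustration of lining notes up with a recording, here is a minimal Python sketch. It assumes the moderator stamps each note with the wall-clock time and that the recording's start time is known. The `ModeratorNote` structure, its field names, and the category labels are hypothetical and not part of our actual protocol.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ModeratorNote:
    # Hypothetical structure: one entry per moderator observation.
    taken_at: datetime  # wall-clock time the note was written
    category: str       # e.g. "confused", "bored", "engaged", "comment"
    text: str


def video_offset(recording_start: datetime, note: ModeratorNote) -> timedelta:
    """Return how far into the recording the note was taken."""
    offset = note.taken_at - recording_start
    if offset < timedelta(0):
        raise ValueError("note was taken before the recording started")
    return offset


def format_offset(offset: timedelta) -> str:
    """Format an offset as HH:MM:SS for scrubbing through the video."""
    total = int(offset.total_seconds())
    hours, rest = divmod(total, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}"
```

For example, a note taken at 14:07:32 in a session whose recording started at 14:00:00 maps to `00:07:32` in the video.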

POST-SESSION SURVEY ended up being the last major tool needed. While moderators are good at catching unfiltered moments of extreme emotion, and video is a good objective record of what happened, neither allows for peering into the mind of the tester. Given the heavily qualitative nature of the playtest focus, the survey filled the major remaining gap in the test overall.

Building a Playtest Package

The next step was to put together a package the moderators could use to quickly set up, playtest, and reset. For this, three pieces of material were needed: the survey, the moderator script, and the build we were testing.

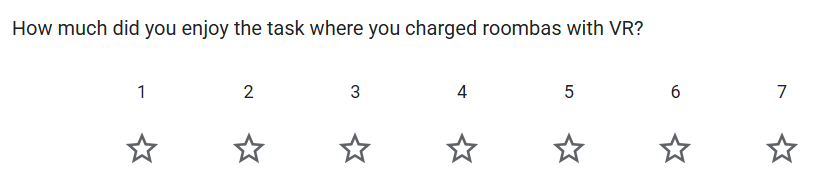

For the survey, I wanted to make sure we had a good mix of qualitative and quantitative response types. Converting emotions and impressions, which are inherently vague, into a 7-point rating seemed like a strong start, allowing us to analyze the impressions testers were getting. However, letting them elaborate was also important, so answer boxes for justifying each score were included as well. For the goal of identifying themes, I left some open-ended short-answer questions so players could fill in their own answers without being led toward particular ones. By mixing question types, I feel I ensured that the data we'd get would be informative about what steps to take next and where to revisit design elements.

Figure 1 - Quantitative metrics of vague things like enjoyment allow us to judge how effective our mechanics are.
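To show how the 7-point scores might be reviewed afterwards, here is a minimal Python sketch that summarizes one question across testers. It assumes each response is a dictionary mapping a question key to a score from 1 to 7, with 1 as the lowest. The question keys and the `low_threshold` cutoff are hypothetical, not the actual survey fields.

```python
from statistics import mean, median


def summarize_scores(responses, question, low_threshold=3):
    """Summarize 1-7 scores for one survey question.

    `responses` is a list of dicts mapping question keys to scores.
    Testers who skipped the question are ignored.
    """
    scores = [r[question] for r in responses if question in r]
    if not scores:
        raise ValueError(f"no responses for question {question!r}")
    for score in scores:
        if not 1 <= score <= 7:
            raise ValueError(f"score {score} is outside the 1-7 scale")
    return {
        "n": len(scores),
        "mean": mean(scores),
        "median": median(scores),
        # Low scores are worth cross-checking against the written
        # justifications and the moderator notes.
        "low_count": sum(1 for s in scores if s <= low_threshold),
    }
```

With only a handful of testers, the median and the count of low scores are probably more useful than the mean, and the written justifications still carry most of the meaning.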

For the moderator script, the main focus was on consent. I wanted to make sure testers were well aware of their ability to revoke consent, were given the tools necessary to do so, and that their consent was informed in every necessary way. As such, the script is a bit tedious, but it clearly communicates the needed information to the tester to ensure the team's ethical obligations are met. To assist the moderator, I expanded the script to include the moderator note prompts, copies of contact information the tester can use to revoke consent at a later date, and the setup instructions. I called this a cheat sheet, and I believe it will help streamline the process for the moderator and ensure the relevant information is communicated to the tester.

The Build

Finally, the build. This was probably one of the most complicated elements of the playtest, as it needed to include all the elements we wanted to test. Unfortunately, due to illness, we were unable to test the level design for this playtest. With that left out, I identified the elements that needed to be included:

- A minigame with both a VR and non-VR gameplay version to test our design intent.

- A narrative text set to test if fuller game context would impact responses.

- The movement mechanic to allow you to navigate.

- The headset mechanic to ensure it was effective and intuitive.

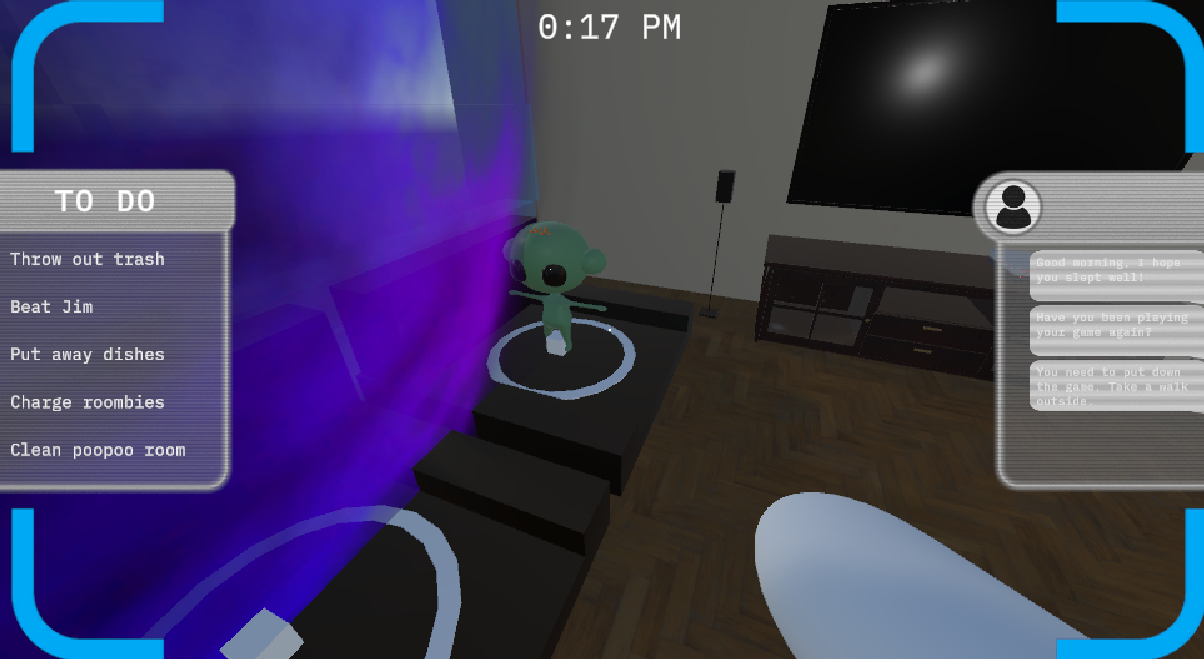

- The game UI to ensure it functioned and was legible to players.

- Sound effects to test that our audio systems were working correctly.

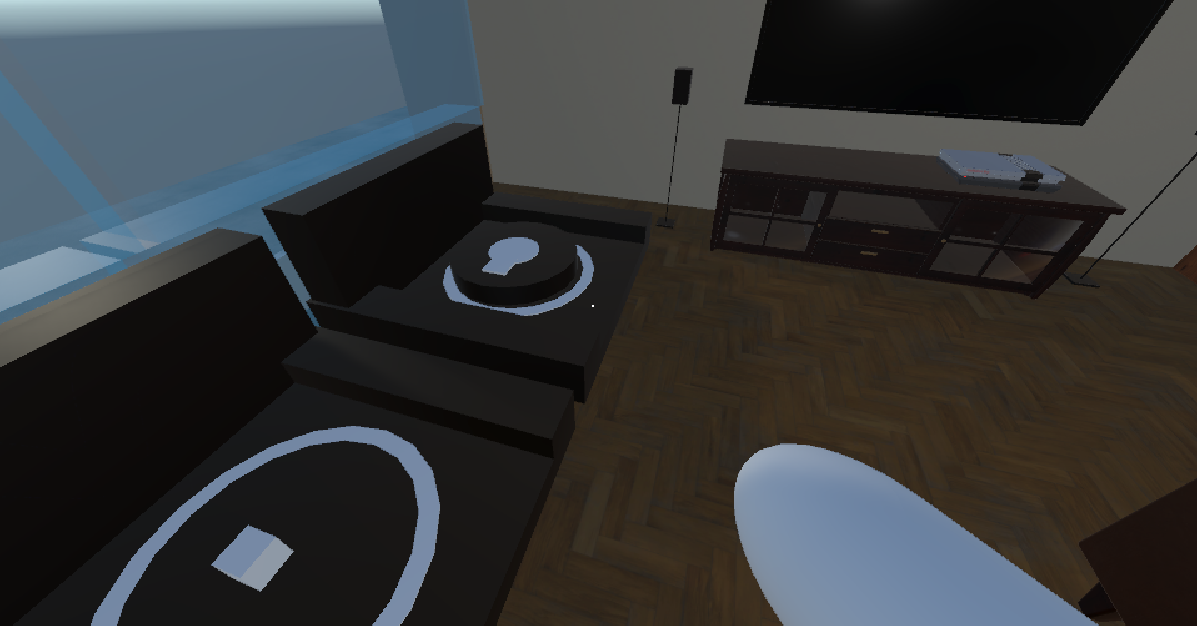

I used a preconstructed demo map from an asset pack and hollowed out a few rooms to use as a space. I ended up putting in the Trash minigame, where you pick up trash and throw it in the bin, and the Roombas minigame, where you find roombas and throw them into their chargers. Both minigames have VR versions.

A narrative trigger was put in one of the side rooms to allow the player to discover it, but unfortunately it did not end up being tied to minigame completion as originally intended.

Headset and movement mechanics were imported from the team's work, and the UI was implemented into the headset mechanic. Finally, Jon, who was handling sound, implemented the audio. Braeden then went through for one last visual and aesthetics pass, adding some last-minute polish, and then the build was ready for testing.

Conclusions

Building a playtest protocol is hard. Going from some vague ideas to a package your team can download and run quickly is intense and stressful. But by focusing in on a few specific areas, building your tools correctly, and working with your team to jigsaw a puzzle of disparate pieces together, it ends up being possible. Having only a few questions to validate is important for keeping your focus narrow. Choosing tools that answer those questions appropriately is key. Those tools then inform what you need in your build to make sure moderators can test effectively. A few basic steps, perhaps, but ones that take some time and effort to get right. I hope I managed to strike a good balance with this testing protocol, and I will be watching closely to learn how to handle any future protocols.

Because this time, we're testing the playtest, too.

My Ordinary AR Life

| Status | Prototype |
| Authors | Braeden, Jonathan To, Arc Zhu, Naitoshadou, St2ele |
| Genre | Puzzle |
| Tags | Comedy, Puzzle-Platformer |
